Inside the Colossus: How NVIDIA and xAI Teamed Up to Build a Supercomputer for Grok 3

NVIDIA provides technology beyond GPUs. In building Colossus, xAI partnered with NVIDIA, using its high-end networking and software to build and manage what xAI calls the world’s largest supercomputer.

Imagine a giant digital brain, built to think faster and smarter than anything we’ve seen before. That’s what xAI, the AI company started by Elon Musk, set out to create with their Colossus data center. This massive supercomputer powers Grok 3, the latest version of their truth-seeking AI chatbot. But xAI didn’t do it alone—they had a powerhouse partner in NVIDIA, a company famous for its cutting-edge tech. NVIDIA didn’t just supply the raw muscle with their H100 GPUs; they brought a whole toolkit, including the Spectrum-X Ethernet and likely their NVIDIA AI Enterprise platform, to make Colossus a reality. Let’s break it down in simple terms and see what the big shots at xAI and NVIDIA have to say about it.

The H100 GPUs: The Heart of Colossus

At the core of Colossus are 100,000 NVIDIA H100 GPUs. Think of them as super-fast calculators designed specifically for AI. These GPUs (short for Graphics Processing Units) are like the engines that crunch massive amounts of data to teach Grok 3 how to understand the world. Each one can handle trillions of calculations per second, making them perfect for training an AI that needs to analyze tons of text, images, and more. xAI is already planning to double the total to 200,000, mixing in newer H200 GPUs, which carry more and faster memory.

Elon Musk himself put it this way: “Colossus is the most powerful AI training system in the world. Nice work by xAI team, NVIDIA and our many partners/suppliers.” That’s a big claim, and the H100s are a big part of what backs it up. They’re built to handle the heavy lifting of AI training, where the system learns by processing data over and over until it gets smarter. Without these GPUs, Grok 3 wouldn’t have the horsepower to aim for being “the smartest AI on Earth,” as Musk and xAI’s team described it in a recent livestream.

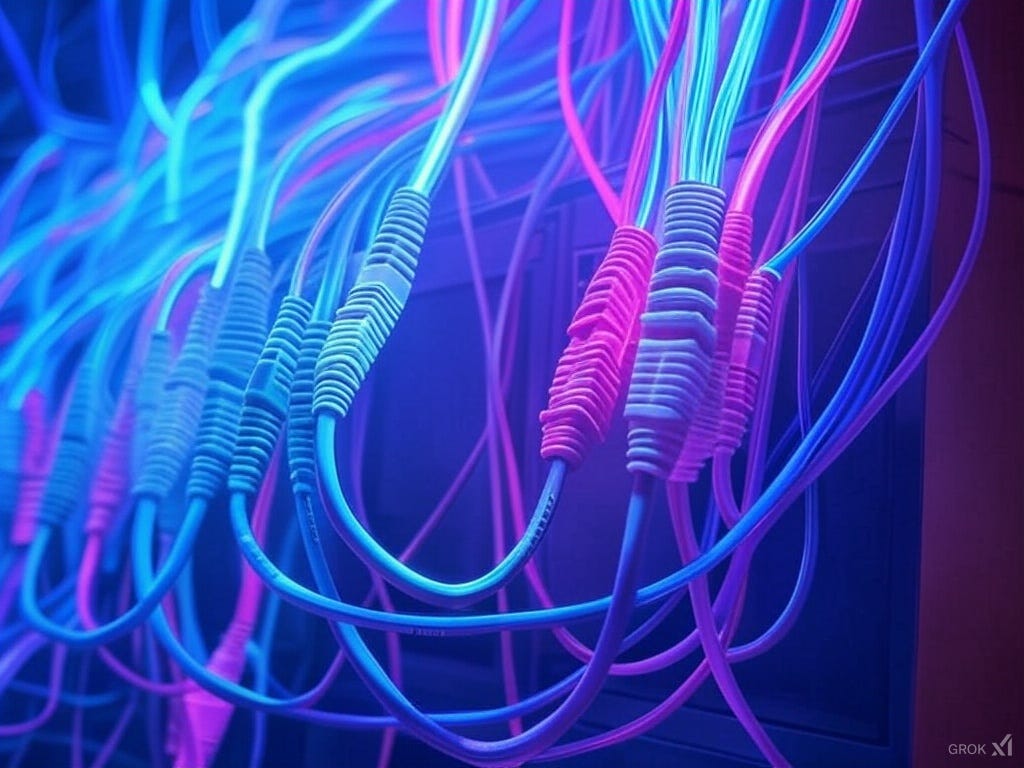

Spectrum-X Ethernet: The Superhighway for Data

But raw power isn’t enough—those GPUs need to talk to each other fast. That’s where NVIDIA’s Spectrum-X Ethernet comes in. Picture a busy city with thousands of cars (the GPUs) trying to share information. Without good roads, you’d get traffic jams, and everything would slow down. Spectrum-X is like a superhighway that keeps data moving smoothly between all 100,000 GPUs. It’s a networking system that uses Ethernet—a common way computers connect—but turbocharges it for AI workloads.

According to NVIDIA, Colossus has maintained 95% data throughput across its network, with zero application latency degradation or packet loss caused by flow collisions. That’s a fancy way of saying everything keeps running fast and without hiccups. Flow collisions happen when several data streams get steered onto the same link at once (like car crashes on a road); NVIDIA says standard Ethernet at this scale would suffer thousands of them and deliver only about 60% throughput. Spectrum-X avoids that with smart tricks like congestion control and adaptive routing. This is a big deal because, as an xAI spokesperson put it, “NVIDIA’s Hopper GPUs and Spectrum-X allow us to push the boundaries of training AI models at a massive scale, creating a super-accelerated and optimized AI factory.” In other words, Spectrum-X makes sure Grok 3’s training doesn’t get stuck in traffic.
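For readers who like to see the idea in code, here is a toy Python sketch of why load-aware routing helps. It is only an illustration under simplifying assumptions, not how Spectrum-X is actually implemented: every flow is the same size, the function names are made up for this example, and "static" routing is modeled as a pseudo-random pick of a link (standing in for a hash of the packet header) that ignores how busy that link already is.

```python
import random
from collections import Counter


def static_hash_routing(num_flows, num_links, rng):
    """Toy model: each flow lands on a pseudo-random link, ignoring current load."""
    return Counter(rng.randrange(num_links) for _ in range(num_flows))


def adaptive_routing(num_flows, num_links):
    """Toy model: each flow goes to whichever link currently carries the fewest flows."""
    load = [0] * num_links
    for _ in range(num_flows):
        least_loaded = min(range(num_links), key=load.__getitem__)
        load[least_loaded] += 1
    return Counter(dict(enumerate(load)))


def busiest_link(loads):
    """Number of flows on the most crowded link; higher means more 'traffic jams'."""
    return max(loads.values())


rng = random.Random(0)  # fixed seed so the run is repeatable
static = static_hash_routing(64, 8, rng)
adaptive = adaptive_routing(64, 8)

print("busiest link, static hashing:  ", busiest_link(static))
print("busiest link, adaptive routing:", busiest_link(adaptive))
```

With 64 equal flows and 8 links, the adaptive version always ends up with exactly 8 flows per link, while the static version can never do better than that and usually piles extra flows onto a few unlucky links. Real networks are far messier (flows differ in size and come and go), but the intuition carries over: spreading traffic based on actual load keeps any one road from jamming.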

Gilad Shainer, NVIDIA’s Senior VP of Networking, added, “The NVIDIA Spectrum-X Ethernet networking platform is designed to provide innovators such as xAI with faster processing, analysis and execution of AI workloads.” For the layperson, that means it’s all about speed and efficiency—keys to getting Grok 3 ready faster.

NVIDIA AI Enterprise: The Brain Behind the Operation?

While NVIDIA and xAI haven’t explicitly confirmed it in their announcements, it’s likely that the NVIDIA AI Enterprise platform played a role too. Think of this as the conductor of an orchestra, making sure all the GPUs and networks play in harmony. It’s a software package that helps companies manage giant AI projects, from setting up the training process to keeping the system running smoothly. For a project as huge as Colossus, which went from an empty factory to a working supercomputer in just 122 days, this kind of software could be a game-changer.

NVIDIA AI Enterprise simplifies the tricky stuff—like splitting up tasks across thousands of GPUs or fixing problems when they pop up. It’s built to work with NVIDIA’s hardware, like the H100s and Spectrum-X, so it’s a natural fit. While we don’t have a direct quote tying it to Colossus, NVIDIA’s own descriptions call it “an end-to-end, secure, cloud-native suite of AI software,” perfect for “accelerating the development and deployment of AI.” That sounds like exactly what xAI needed to get Grok 3 off the ground so fast.

Why It Matters: A Team Effort for a Smarter AI

The collaboration between NVIDIA and xAI isn’t just about piling up tech. It’s about building something groundbreaking. The H100 GPUs give Colossus its muscle, Spectrum-X keeps the data flowing, and NVIDIA AI Enterprise (if used) ties it all together. Combined, they’ve created what Musk calls “the most powerful AI training system in the world,” and they did it in record time: 122 days from start to finish, with training starting just 19 days after the first racks arrived.

For us regular folks, this means Grok 3 could soon be answering our questions with unmatched smarts, thanks to a supercomputer that’s more than the sum of its parts. As an xAI spokesperson summed it up, “xAI has built the world’s largest, most-powerful supercomputer. NVIDIA’s Hopper GPUs and Spectrum-X allow us to push the boundaries of training AI models at a massive scale.” And with plans to double Colossus to 200,000 GPUs, the future looks even bigger.

So, next time you chat with Grok 3, remember: it’s not just Elon’s vision or xAI’s grit—it’s also NVIDIA’s tech wizardry making it happen, one GPU, network, and software trick at a time.